In this new article series, we focus on working with LLMs to scale your SEO tasks, and we hope to help you integrate AI into SEO to level up your skills.

I hope you enjoyed the last article and understood what vectors, vector distance and text embeddings are.

Now it’s time to flex your “AI knowledge muscles” by learning how to find keyword cannibalization using text embeddings.

We’ll start by comparing OpenAI’s text embedding models.

| Model | Dimensions | Price | Notes |
|---|---|---|---|
| text-embedding-ada-002 | 1536 | $0.10 per 1 million tokens | Good for most use cases |
| text-embedding-3-small | 1536 | $0.02 per 1 million tokens | Faster and cheaper, but less accurate |
| text-embedding-3-large | 3072 | $0.13 per 1 million tokens | More accurate for complex, long text-related tasks, but slower |

(Tokens can be thought of roughly as words.)
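Once Python is set up (see below), you can get a rough idea of cost before running a job by multiplying the token count by the per-million price. The sketch below is a back-of-the-envelope estimate only. It assumes the common rule of thumb of about four characters per token for English text, which is an approximation and not OpenAI’s tokenizer. The prices are OpenAI’s list prices at the time of writing, so check the pricing page for current rates.

```python
# USD per 1 million tokens (list prices at the time of writing; may change)
PRICE_PER_MILLION = {
    "text-embedding-ada-002": 0.10,
    "text-embedding-3-small": 0.02,
    "text-embedding-3-large": 0.13,
}


def approx_tokens(text: str) -> int:
    # Rough heuristic: about 4 characters per token for English text.
    # For exact counts, use OpenAI's tokenizer (the tiktoken package).
    return max(1, round(len(text) / 4))


def estimate_cost(texts: list, model: str) -> float:
    """Approximate USD cost of embedding every text with the given model."""
    total_tokens = sum(approx_tokens(t) for t in texts)
    return total_tokens / 1_000_000 * PRICE_PER_MILLION[model]


# Example: 10,000 short page titles
titles = ["Meta Tags: What You Need to Know for SEO"] * 10_000
for model in PRICE_PER_MILLION:
    print(f"{model}: ~${estimate_cost(titles, model):.4f}")
```

For short inputs like page titles, the cost is usually negligible. The per-token price matters more if you embed full article bodies.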

But before you begin, you will need to install Python and Jupyter on your computer.

Jupyter is a web-based tool for professionals and researchers to perform complex data analysis and develop machine learning models using the programming language of their choice.

Don’t worry: the installation is easy and quick, and remember that ChatGPT can help you whenever you get stuck with code.

In a nutshell:

- Download and install Python.

- Open the command line on Windows or Terminal on Mac.

- Enter these commands: `pip install jupyterlab` and `pip install notebook`.

- Run Jupyter with the following command: `jupyter lab`.

Use Jupyter to experiment with text embeddings and see how fun they are to work with.

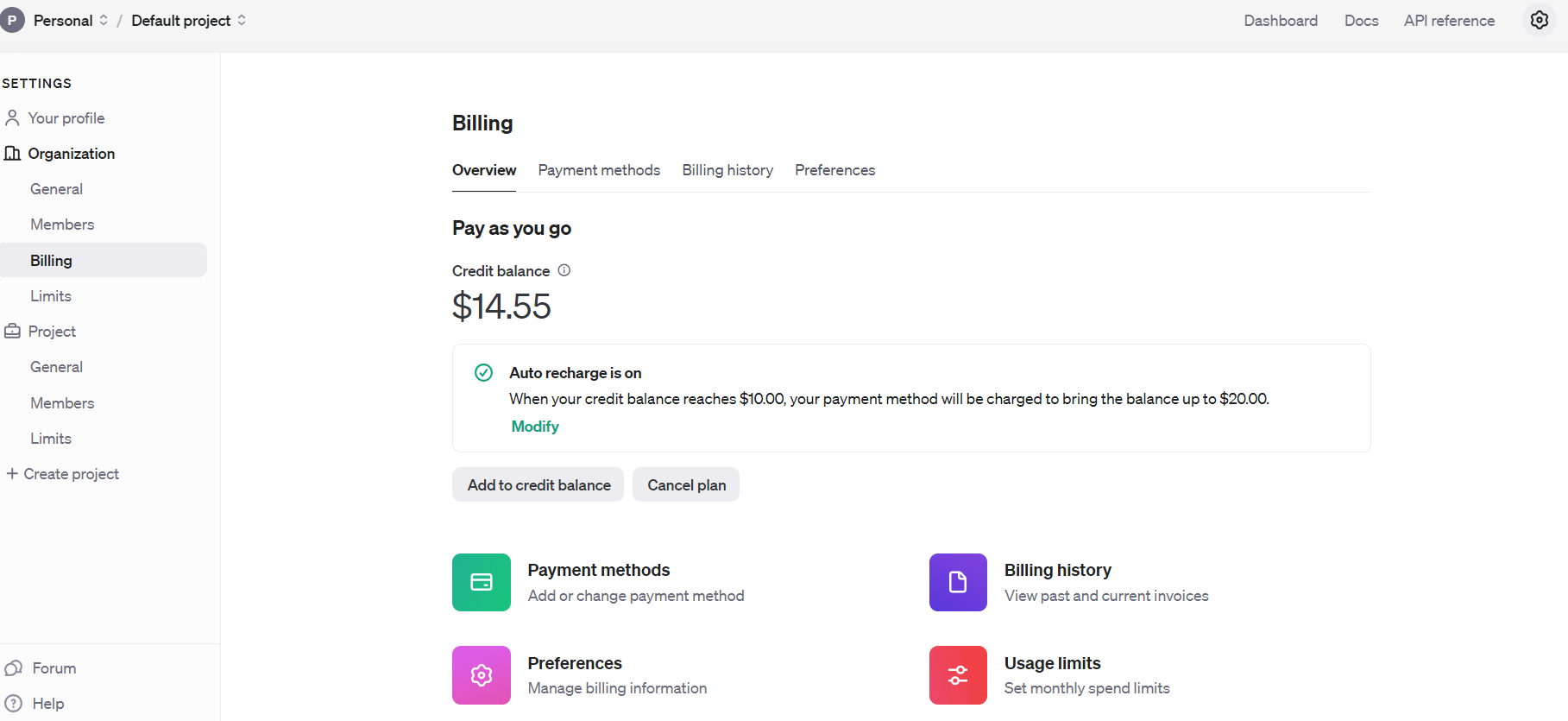

Before you get started, though, you’ll need to sign up for the OpenAI API, add funds to your balance, and set up billing.

OpenAI API billing settings

Once you’ve done that, set up email notifications in your usage limits so that you’re alerted when your spending exceeds a certain amount.

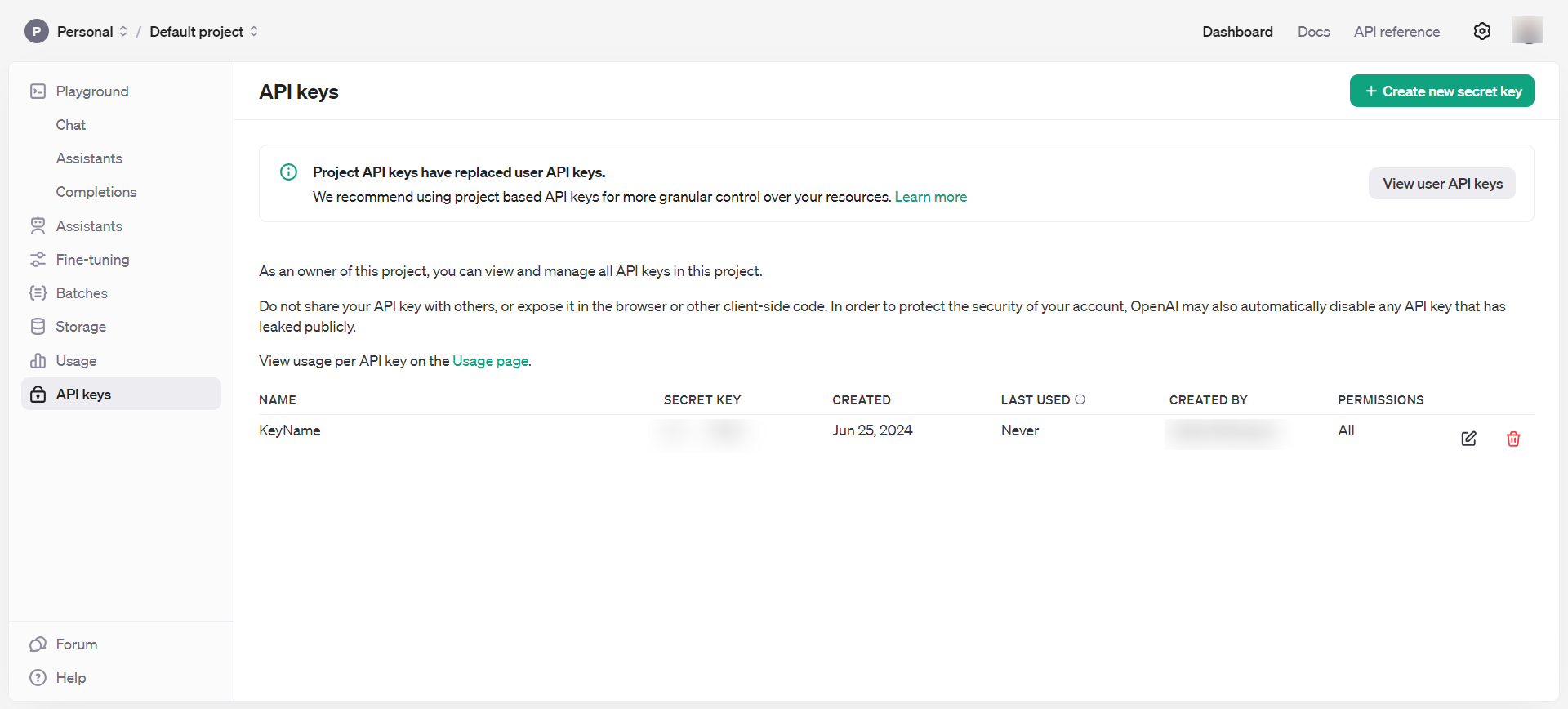

Next, create an API key under Dashboard > API keys. Keep your keys private and never make them public.
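One way to keep the key out of your notebook is to store it in an environment variable. The `openai` Python library (version 1.0 and later) reads `OPENAI_API_KEY` from the environment automatically when you create a client without an `api_key` argument. The `load_openai_key` helper below is a hypothetical addition that only fails early, with a clear message, if the variable is missing.

```python
import os


def load_openai_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key from an environment variable instead of hard-coding it."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable before running the notebook."
        )
    return key


# Usage (requires `pip install openai`):
# from openai import OpenAI
# client = OpenAI(api_key=load_openai_key())
```

You can set the variable with `export OPENAI_API_KEY=...` in Terminal on Mac, or with `setx OPENAI_API_KEY "..."` on Windows. Restart Jupyter afterward so it picks up the change.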

OpenAI API key

Now you have all the tools you need to start working with embeddings.

- Open a command terminal on your computer and type:

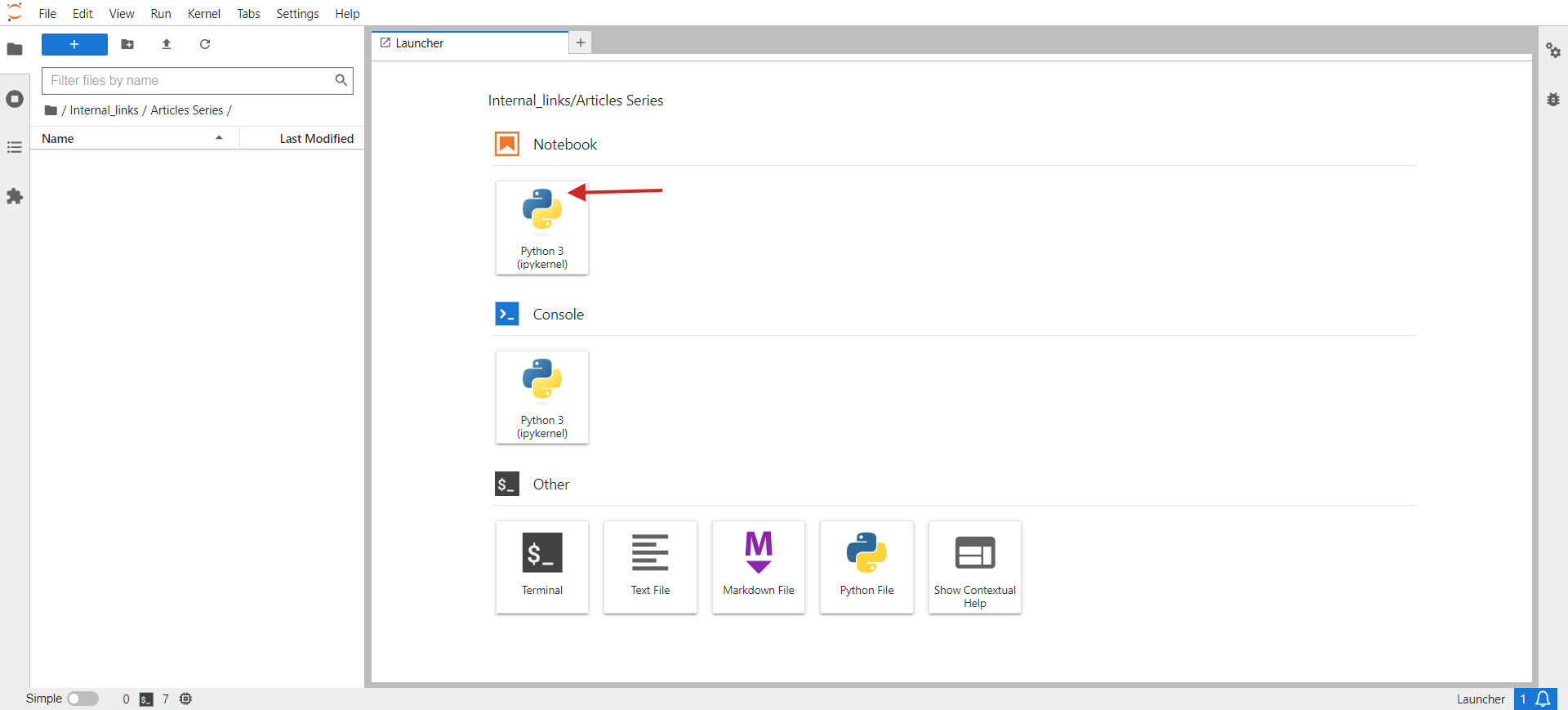

jupyter lab. - You will see a popup in your browser that looks something like the image below.

- click About Python3 under Note.

Jupiter Lab

Jupiter LabIn the window that opens, write your code.

As a small task, let’s group similar URLs from a CSV file. The sample CSV has two columns: URL and Title. The script groups semantically similar URLs based on their titles, so that you can merge those pages into one and fix the keyword cannibalization issue.

Here are the steps you need to follow:

In a terminal on your computer (or in a Jupyter notebook cell), run the following command to install the required Python libraries:

pip install pandas openai scikit-learn numpy unidecode

The `openai` library is needed to call the OpenAI API and get the embeddings, and `pandas` handles data manipulation and reading and writing CSV files.

The `scikit-learn` library calculates cosine similarity, and `numpy` handles numerical operations and array processing. Finally, `unidecode` is used to clean up the text.

Next, download the example sheet as a CSV, rename the file to `pages.csv`, and put it in the same Jupyter folder as your notebook.

Replace the placeholder OpenAI API key in the code with the key you obtained above, then copy and paste the following code into your notebook.

Click the play (triangle) icon at the top of the notebook to run the code.

```python
import csv

import numpy as np
import pandas as pd
from openai import OpenAI
from sklearn.metrics.pairwise import cosine_similarity
from unidecode import unidecode


# Function to clean text
def clean_text(text: str) -> str:
    # First, replace known mojibake (UTF-8 text mis-decoded as Windows-1252)
    # with the characters it was meant to be
    replacements = {
        'â€“': '–',    # en dash
        'â€”': '—',    # em dash
        'â€™': '’',    # right single quotation mark
        'â€˜': '‘',    # left single quotation mark
        'â€œ': '“',    # left double quotation mark
        'â€\x9d': '”',  # right double quotation mark
    }
    for old, new in replacements.items():
        text = text.replace(old, new)
    # Then, use unidecode to transliterate any remaining Unicode characters to ASCII
    return unidecode(text)


# Load the CSV file (UTF-8) from the same folder as the notebook
df = pd.read_csv('pages.csv', encoding='utf-8')

# Clean the 'Title' column to remove unwanted symbols
df['Title'] = df['Title'].astype(str).apply(clean_text)

# Set your OpenAI API key (client for openai>=1.0)
client = OpenAI(api_key='your-api-key-goes-here')


# Function to get the embedding vector for one title
def get_embedding(text):
    response = client.embeddings.create(input=[text], model="text-embedding-ada-002")
    return response.data[0].embedding


# Generate embeddings for all titles
df['embedding'] = df['Title'].apply(get_embedding)

# Create a matrix of embeddings (one row per title)
embedding_matrix = np.vstack(df['embedding'].values)

# Compute the pairwise cosine similarity matrix
similarity_matrix = cosine_similarity(embedding_matrix)

# Define similarity threshold (equivalent to a cosine distance of 0.1)
similarity_threshold = 0.9

# Create a list to store groups
groups = []

# Keep track of indices that already belong to a group
visited = set()

# Group similar titles based on the similarity matrix
for i in range(len(similarity_matrix)):
    if i not in visited:
        # Find all similar titles that are not already in a group
        # (title i itself is included, since its self-similarity is 1.0)
        candidates = np.where(similarity_matrix[i] >= similarity_threshold)[0]
        similar_indices = [j for j in candidates if j not in visited]

        # Log comparisons
        print(f"\nChecking similarity for '{df.iloc[i]['Title']}' (Index {i}):")
        print("-" * 50)
        for j in range(len(similarity_matrix)):
            if i != j:  # Don't compare a title with itself
                similarity_value = similarity_matrix[i, j]
                comparison_result = "greater" if similarity_value >= similarity_threshold else "less"
                print(f"Compared with '{df.iloc[j]['Title']}' (Index {j}): "
                      f"similarity = {similarity_value:.4f} ({comparison_result} than threshold)")

        # Mark these indices as visited
        visited.update(similar_indices)

        # Add the group to the list
        group = df.iloc[similar_indices][['URL', 'Title']].to_dict('records')
        groups.append(group)
        print(f"\nFormed Group {len(groups)}:")
        for item in group:
            print(f" - URL: {item['URL']}, Title: {item['Title']}")

# Check if groups were created
if not groups:
    print("No groups were created.")

# Define the output CSV file
output_file = "grouped_pages.csv"

# Write the results to the CSV file with UTF-8 encoding
with open(output_file, 'w', newline='', encoding='utf-8') as csvfile:
    fieldnames = ['Group', 'URL', 'Title']
    writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
    writer.writeheader()
    for group_index, group in enumerate(groups, start=1):
        for page in group:
            cleaned_title = clean_text(page['Title'])  # Ensure no unwanted symbols in the output
            writer.writerow({'Group': group_index, 'URL': page['URL'], 'Title': cleaned_title})
            print(f"Writing Group {group_index}, URL: {page['URL']}, Title: {cleaned_title}")

print(f"Output written to {output_file}")
```

This code reads a CSV file “pages.csv” that contains the titles and URLs. This file can easily be exported from a CMS or retrieved by crawling a client’s website using Screaming Frog.

The script then repairs mis-encoded characters in the titles and converts the remaining non-ASCII characters to plain text. It generates an embedding vector for each title using OpenAI’s API, calculates the similarity between titles, groups similar titles, and writes the grouped results to a new CSV file, `grouped_pages.csv`.
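To see what `cosine_similarity` computes, here is the same formula written out by hand: the dot product of two vectors divided by the product of their lengths. This is an illustrative pure-Python sketch that uses toy 2D vectors rather than real 1,536-dimensional embeddings. In the script itself, scikit-learn does this for every pair of titles at once.

```python
import math


def cosine(u: list, v: list) -> float:
    """Cosine similarity: dot(u, v) / (|u| * |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


print(cosine([1.0, 0.0], [1.0, 0.0]))  # same direction
print(cosine([1.0, 0.0], [0.0, 1.0]))  # perpendicular
print(cosine([1.0, 0.0], [2.0, 2.0]))  # 45 degrees apart; vector length doesn't matter
```

Only the angle between the vectors matters, not their length. OpenAI’s embeddings are normalized to length 1, so for them a plain dot product gives the same result.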

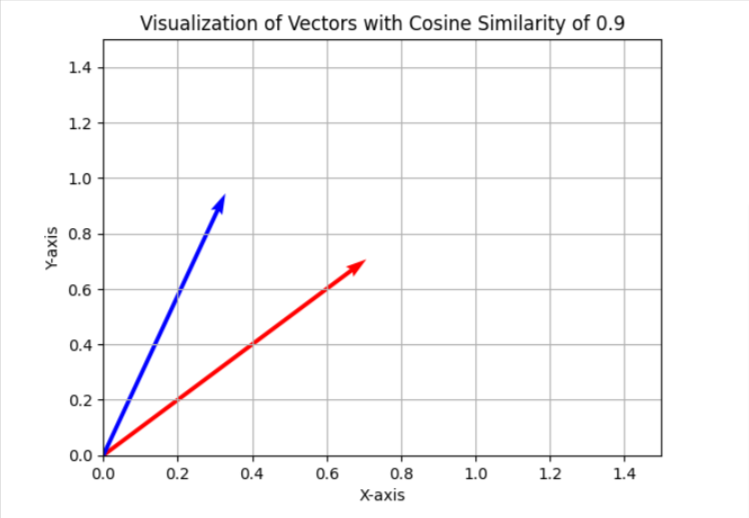

The keyword cannibalization task uses a similarity threshold of 0.9: two articles are considered different if their cosine similarity is below 0.9. In a simplified 2D space, a cosine similarity of 0.9 corresponds to two vectors with an angle of about 26 degrees between them.

For your own data, I would recommend trying a different threshold, say 0.85 (an angle of about 32 degrees). Run it on a sample of your data and evaluate the results and the overall quality of the matches. If the results are not satisfactory, raise the threshold to make matching stricter and more precise.
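The angle for any threshold is the inverse cosine of the similarity. This small sketch converts a few thresholds used in this article to degrees. The function name is only illustrative.

```python
import math


def threshold_to_angle(similarity: float) -> float:
    """Angle in degrees between two vectors with the given cosine similarity."""
    return math.degrees(math.acos(similarity))


for threshold in (0.9, 0.85, 0.5):
    print(f"cosine similarity {threshold} -> about {threshold_to_angle(threshold):.1f} degrees")
```

Lowering the threshold widens the angle that still counts as a match, so more, looser pairs get grouped together.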

You can install `matplotlib` from the terminal with `pip install matplotlib`.

Then, in a separate Jupyter notebook, use the following Python code to create your own visualization of cosine similarity in 2D space. Feel free to try it out; it’s fun!

```python
import matplotlib.pyplot as plt
import numpy as np

# Cosine similarity to visualize. Change this to your desired value.
similarity = 0.9

# Angle between two vectors with this cosine similarity
theta = np.arccos(similarity)

# Define the vectors: u along the x-axis, v rotated by theta
u = np.array([1, 0])
v = np.array([np.cos(theta), np.sin(theta)])

# Define the 45-degree rotation matrix
rotation_matrix = np.array([
    [np.cos(np.pi / 4), -np.sin(np.pi / 4)],
    [np.sin(np.pi / 4), np.cos(np.pi / 4)],
])

# Apply the rotation to both vectors
# (keeps both arrows in the positive quadrant for angles up to 45 degrees)
u_rotated = np.dot(rotation_matrix, u)
v_rotated = np.dot(rotation_matrix, v)

# Plot the vectors as arrows from the origin
plt.figure()
plt.quiver(0, 0, u_rotated[0], u_rotated[1], angles="xy", scale_units="xy", scale=1, color="r")
plt.quiver(0, 0, v_rotated[0], v_rotated[1], angles="xy", scale_units="xy", scale=1, color="b")

# Set the plot limits to positive ranges only
plt.xlim(0, 1.5)
plt.ylim(0, 1.5)

# Use equal axis scaling so the angle on screen matches the real angle
plt.gca().set_aspect("equal")

# Add labels and grid
plt.xlabel('X-axis')
plt.ylabel('Y-axis')
plt.grid(True)
plt.title(f'Visualization of Vectors with Cosine Similarity of {similarity}')

# Show the plot
plt.show()
```

I usually use 0.9 or higher to identify keyword cannibalization issues. If you’re dealing with redirects for old articles, though, you may need to lower it to 0.5, because an old article may not have a near-identical newer article, only a partially similar one.

For redirects, it may also be a good idea to concatenate the meta description with the title.
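A minimal sketch of that concatenation, assuming your export has a meta description next to each title. The helper name and the period separator are illustrative choices, not part of the script above. Missing or blank descriptions fall back to the title alone.

```python
def build_embedding_input(title, meta_description=None) -> str:
    """Combine a page title and an optional meta description into one string to embed."""
    parts = []
    for part in (title, meta_description):
        # Skip missing values (None, or NaN from pandas) and blank strings
        if isinstance(part, str) and part.strip():
            parts.append(part.strip())
    # Join with a period so the two parts read as separate sentences
    return ". ".join(parts)
```

With pandas, you could build a new column with `[build_embedding_input(t, d) for t, d in zip(df['Title'], df['Meta Description'])]` and embed it instead of `df['Title']`. The `Meta Description` column name is hypothetical and depends on your CMS or crawler export.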

So the right threshold depends on the task you are performing. We’ll discuss how to implement redirects in a later article in this series.

Let’s now look at the results of the three models above and see how well they identified closely related articles in our data sample of Search Engine Journal articles.

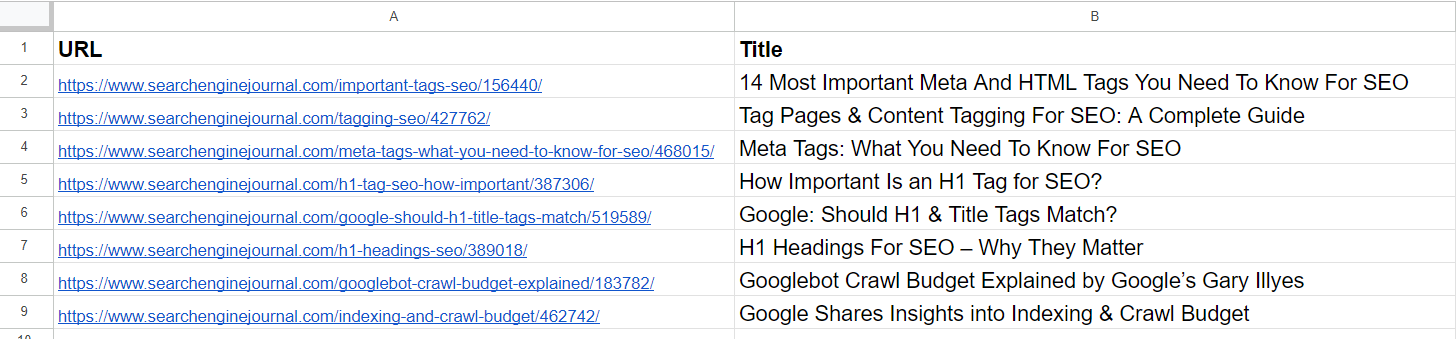

Data sample

From the list, we can already see that the second and fourth articles cover the same topic, “Meta Tags.” The articles in the fifth and seventh rows are nearly the same: both discuss the importance of H1 tags in SEO, so they can be combined.

The article in the third row isn’t related to any of the other articles in the list, although it shares common words such as “tags” and “SEO.”

The article in the sixth row is also about H1s, but it isn’t about the importance of H1s in SEO. Instead, it presents Google’s opinion on whether they should match.

The articles in the eighth and ninth rows are very close, though still different; they could also be combined.

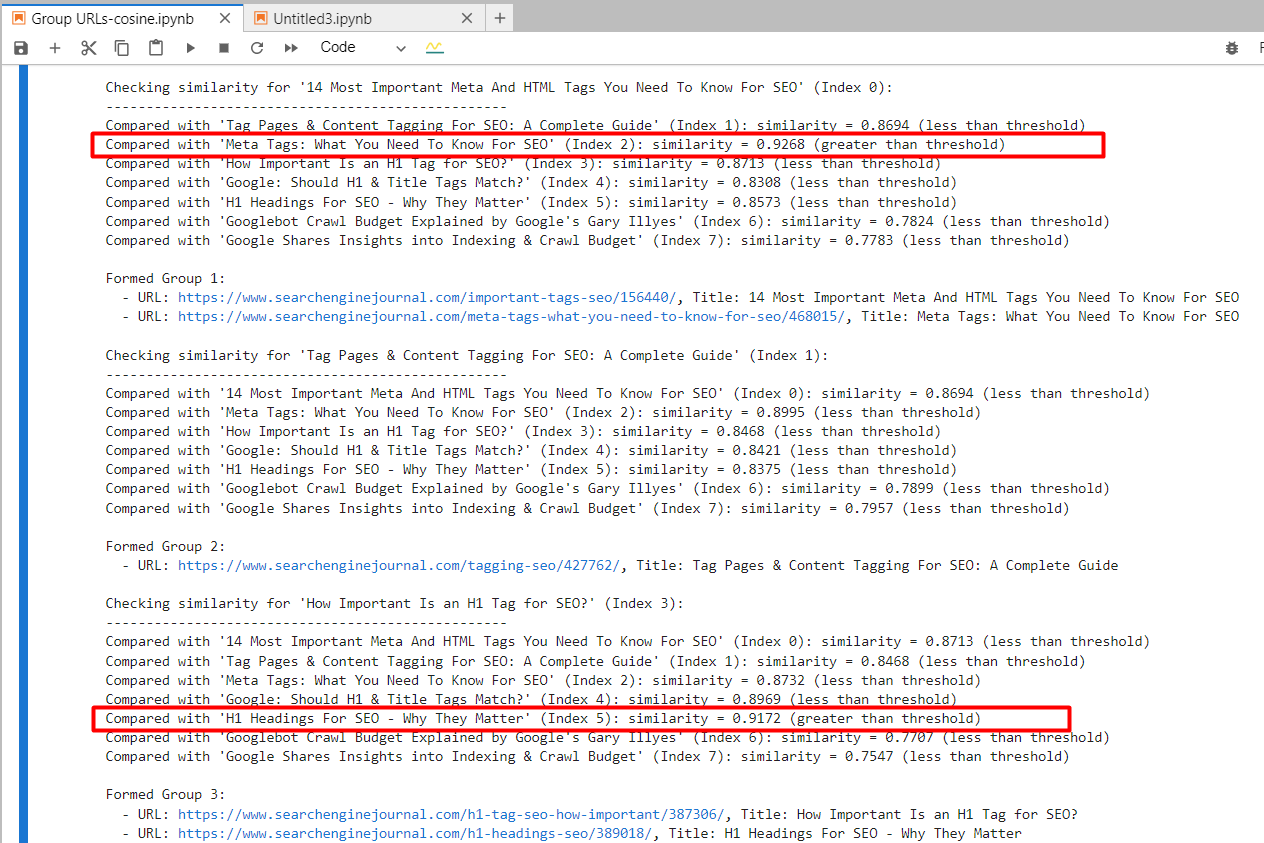

text-embedding-ada-002

Using “text-embedding-ada-002”, we correctly identified the second and fourth articles, with a cosine similarity of 0.92, and the fifth and seventh articles, with a similarity of 0.91.

Screenshot of Jupyter log showing cosine similarity

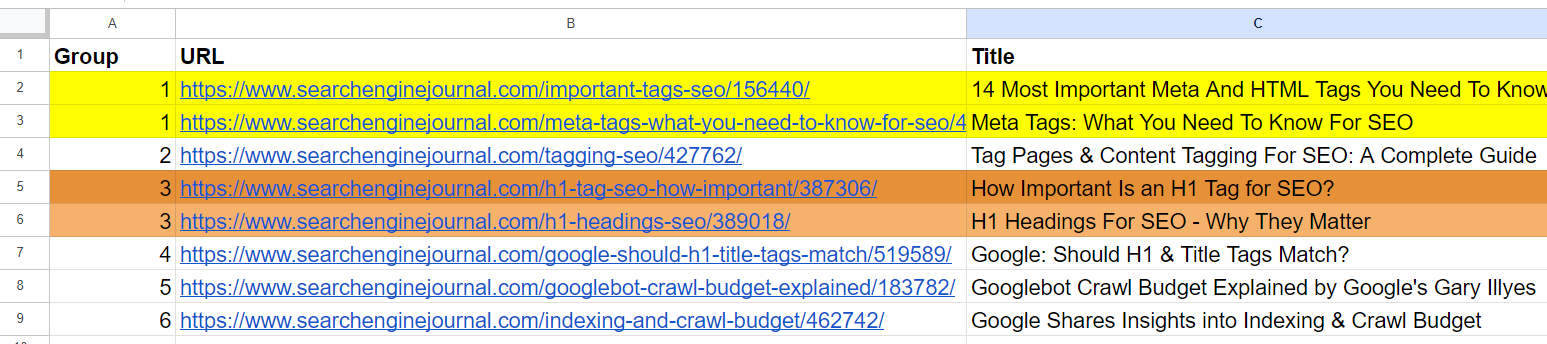

We also generated an output file with grouped URLs, using the same group number for similar articles (colors were applied manually for visualization purposes).

Output sheet containing grouped URLs

The second and third articles share the words “tags” and “SEO” but are unrelated, yet their cosine similarity was 0.86. This shows why a high similarity threshold of 0.9 or more is necessary: setting it to 0.85 would produce many false positives and could suggest merging unrelated articles.
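To see the effect concretely, the sketch below takes the three ada-002 similarity scores reported in this section and checks which pairs each threshold would flag for merging. The scores are hard-coded from the results above. In the real script, they come from `similarity_matrix`.

```python
# Cosine similarities reported above for text-embedding-ada-002 (row pairs from the data sample)
reported_pairs = {
    ("row 2", "row 4"): 0.92,  # both about meta tags
    ("row 5", "row 7"): 0.91,  # both about the importance of H1s
    ("row 2", "row 3"): 0.86,  # share "tags" and "SEO" but are unrelated
}


def pairs_to_merge(pairs: dict, threshold: float) -> list:
    """Return the pairs whose similarity meets or exceeds the threshold."""
    return [pair for pair, score in pairs.items() if score >= threshold]


for threshold in (0.9, 0.85):
    print(threshold, pairs_to_merge(reported_pairs, threshold))
```

At 0.9, only the two genuine duplicates pass. At 0.85, the unrelated pair slips through as a false positive.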

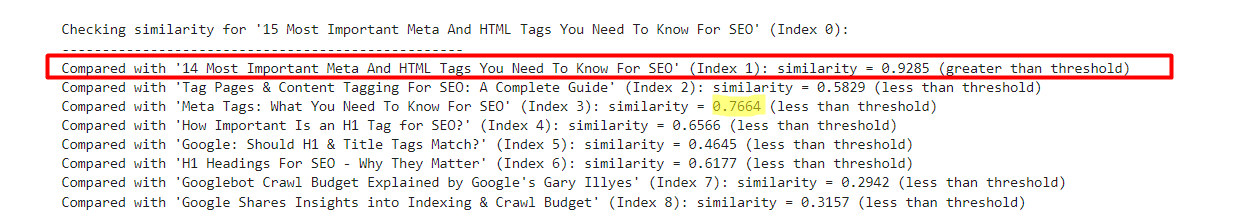

text-embedding-3-small

Surprisingly, “text-embedding-3-small” found no matches above the 0.9 similarity threshold.

For the second and fourth articles, the cosine similarity was 0.76, and for the fifth and seventh articles, the similarity was 0.77.

To understand this model better, we experimented by adding two slightly modified versions of the first row’s title to the sample, one with “14” and one with “15”:

- “The 14 Most Important Meta and HTML Tags to Know for SEO”

- “The 15 Most Important Meta and HTML Tags to Know for SEO”

Example showing the results of text-embedding-3-small

By contrast, “text-embedding-ada-002” gave a cosine similarity of 0.98 between these two versions.

| Title 1 | Title 2 | Cosine similarity |
|---|---|---|
| The 14 Most Important Meta and HTML Tags You Need to Know for SEO | The 15 Most Important Meta and HTML Tags to Know for SEO | 0.92 |
| The 14 Most Important Meta and HTML Tags You Need to Know for SEO | Meta Tags: What You Need to Know for SEO | 0.76 |

Here we can see that this model is not very suitable for comparing titles.

text-embedding-3-large

The dimensionality of this model is 3072, twice that of “text-embedding-3-small” and “text-embedding-ada-002”, which have dimensions of 1536.

Because it has more dimensions than other models, it is expected to capture meaning with greater accuracy.

However, the cosine similarity between the second and fourth articles was 0.70, and between the fifth and seventh articles was 0.75.

I tested it again with slightly modified versions of the first article’s title, using “14” and “15” and, in one case, omitting “Most Important”:

- “The 14 Most Important Meta and HTML Tags to Know for SEO”

- “The 15 Most Important Meta and HTML Tags to Know for SEO”

- “14 Meta and HTML Tags You Need to Know for SEO”

| Title 1 | Title 2 | Cosine similarity |
|---|---|---|
| The 14 Most Important Meta and HTML Tags You Need to Know for SEO | The 15 Most Important Meta and HTML Tags to Know for SEO | 0.95 |
| The 14 Most Important Meta and HTML Tags You Need to Know for SEO | 14 Meta and HTML Tags You Need to Know for SEO | 0.93 |
| The 14 Most Important Meta and HTML Tags You Need to Know for SEO | Meta Tags: What You Need to Know for SEO | 0.70 |
| The 15 Most Important Meta and HTML Tags You Need to Know for SEO | 14 Meta and HTML Tags You Need to Know for SEO | 0.86 |

Therefore, when calculating the cosine similarity between titles, “text-embedding-3-large” performs worse than “text-embedding-ada-002”.

Note that the accuracy of ‘text-embedding-3-large’ increases with the length of the text, but overall ‘text-embedding-ada-002’ performs better.

Another approach would be to remove stop words from the text, which may help focus the embeddings on more meaningful words, potentially improving accuracy in tasks like computing similarity.

The best way to determine whether removing stop words improves accuracy for a particular task and dataset is to experimentally test both approaches and compare the results.
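A minimal way to run that experiment is to embed both the original titles and versions with stop words stripped, then compare the groupings. The sketch below covers only the preprocessing step. The `STOP_WORDS` set is a small hand-picked, illustrative list; libraries such as NLTK or spaCy ship fuller ones.

```python
# Small illustrative stop-word list; not exhaustive
STOP_WORDS = {"a", "an", "and", "are", "for", "in", "is", "of", "on",
              "the", "to", "what", "with", "you"}


def remove_stop_words(text: str) -> str:
    """Drop common function words so the embedding focuses on meaningful terms."""
    kept = [word for word in text.split() if word.lower() not in STOP_WORDS]
    return " ".join(kept)


print(remove_stop_words("The 14 Most Important Meta and HTML Tags to Know for SEO"))
print(remove_stop_words("Meta Tags: What You Need to Know for SEO"))
```

For the comparison, you could compute the similarity matrix once on `df['Title']` and once on `df['Title'].apply(remove_stop_words)`. Then have someone review which grouping is closer to what a human would merge.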

Conclusion

These examples show how you can use OpenAI’s embedding models for a wide range of tasks.

When choosing similarity thresholds, experiment with your own dataset to find the values that suit your particular task. Run them on smaller samples of data and have a human review the output.

Note that the code in this post isn’t optimal for large datasets. Every time the dataset changes, it has to regenerate the text embeddings for all articles and compare each one with every other row.

To be efficient, you should use a vector database to store the generated embeddings. We’ll show you how to use a vector database in a later article and update this code sample to use one.
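Until then, one simple stopgap is to cache embeddings on disk, so that unchanged titles aren’t sent to the API again when you rerun the notebook. This is a sketch, not a replacement for a vector database. The function name, the `embeddings_cache.json` file name and the `embed_fn` parameter are hypothetical. In practice, `embed_fn` would be `get_embedding` from the script above; here a fake embedder stands in for it.

```python
import json
import os
import tempfile


def cached_embeddings(texts, embed_fn, cache_path="embeddings_cache.json"):
    """Return one embedding per text, calling embed_fn only for texts not cached yet."""
    cache = {}
    if os.path.exists(cache_path):
        with open(cache_path, encoding="utf-8") as f:
            cache = json.load(f)

    for text in texts:
        if text not in cache:
            cache[text] = embed_fn(text)  # only new or changed titles reach the API

    with open(cache_path, "w", encoding="utf-8") as f:
        json.dump(cache, f)

    return [cache[text] for text in texts]


# Example with a fake embedder that records every call
calls = []


def fake_embed(text):
    calls.append(text)
    return [float(len(text)), 1.0]


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "embeddings_cache.json")
    cached_embeddings(["Title A", "Title B"], fake_embed, path)
    cached_embeddings(["Title A", "Title B", "Title C"], fake_embed, path)

print(calls)  # each title was embedded only once
```

The cache is keyed by the exact text only. If you switch embedding models, use a separate cache file per model so you don’t mix vectors from different models.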


Featured Image: BestForBest/Shutterstock